Why should citizens evaluate the ethical decisions made by autonomous vehicles? An interview with Edmond Awad about six years of "Moral Machine" and the benefits of public engagement and gamification in fostering trust in artificial intelligence.

“Engaging people in this discussion will increase trust”

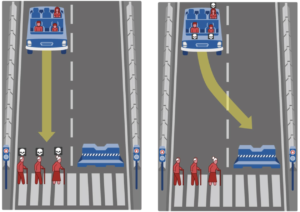

Mr. Awad, Moral Machine is a science experiment designed to involve the public in the moral decisions that autonomous cars must make. In the game, players choose between two accident scenarios and decide which group of people the car should sacrifice. How did you come up with the idea for this game?

The whole project started when I was a student at MIT. When I joined the program in 2015, my advisor Iyad Rahwan was working on a project with Jean-François Bonnefon and Azim Shariff that explored the ethics of autonomous vehicles. For instance, they asked people which group a driverless car should sacrifice in an accident. They found, for example, that most people would prefer driverless cars to sacrifice their own passenger to save ten pedestrians.

We thought about how we could extend this research. Iyad Rahwan suggested building a website where we could manipulate and explore many factors of a possible accident. So we basically started with a dual goal. We wanted to collect data about many different accident scenarios and how varying them affects people's decisions. We also wanted to raise awareness that machines are becoming autonomous and will have to make moral decisions.

Moral Machine

Moral Machine is an online platform for gathering a human perspective on moral decisions made by autonomous vehicles (AVs). It presents users with moral dilemmas faced by AVs. Since its launch in June 2016, the website has received a great deal of publicity. This has produced the largest dataset on machine ethics ever collected: 100 million decisions in ten languages from about eight million people in 233 countries and territories. The platform was created by Iyad Rahwan, Jean-François Bonnefon, and Azim Shariff, and developed by Edmond Awad, Sohan Dsouza, and Paiju Chang.

Not really. During the design and development phase we received helpful feedback from experts, which helped us make the website as popular and interesting as possible. This forced us to make some decisions that were not ideal for a scientific experiment but helped us reach more people. For example, we would have liked to know as much as possible about our participants, but we had to limit the number of questions and keep the amount of text on the website to a minimum. When the website launched, it received much more traffic than expected, so it crashed constantly for the first few weeks. In the years that followed, it also went viral several times, including twice when a post about it reached the front page of Reddit. It's really hard to know exactly what made it so popular. I think the topic itself plays a particularly big role. People were really fascinated by driverless cars, especially at that time. The fact that they are becoming a reality, that machines are becoming autonomous and making decisions on their own: everyone has something to say about all of these things.

What benefits have you seen in using a game?

We defined two different goals for this game. First, we wanted to collect data for a scientific study, and a game offers many benefits for that. It provides a large sample, which allows a more complex study design in which you can vary multiple factors. Scientifically, this lets you estimate the effects of the different factors more robustly than traditional psychology studies can. It also helps you understand how those effects are moderated by other contextual factors. The other goal is public engagement. The game lets people experience the dilemmas themselves, and they feel included in the problem. Gamification also makes the experience more engaging.

You received a lot of criticism for this project. What did the critics say?

Many felt that a game was the wrong way to involve the public in these moral decisions, because many people on the internet were considered too biased and too uninformed about the issue. There were also many online articles about the site with headlines like "MIT is crowdsourcing moral decision making for self-driving cars," which made it seem as if our plan was to collect data and use it to program driverless cars ourselves. That is, of course, not what we plan to do. Such clickbait headlines made many people angry, and they wrote articles criticizing the website for those supposed goals.

Other critics said that the car will never face these rare scenarios, which is true. The car will not face these specific dilemmas in real life. But cars do face moral trade-offs. How will a driverless car distribute the risk it poses? If the cars drive more safely than humans, maybe that is enough to put them on the road. But what if 80 percent of their crashes involve cyclists? Would that be acceptable? Even if they cause far fewer crashes than humans, there might be something wrong with how they distribute risk. I think this is part of the bigger discussion about machines now being deployed in positions of responsibility and how they are changing the distribution of harms and benefits.

Your paper states: "[…] even if ethicists were to agree on how autonomous vehicles should solve moral dilemmas, their work would be useless if citizens were to disagree with their solution […]". Why do you think public perception matters when communicating about autonomous vehicles?

Germany, for instance, was one of the first countries to have an ethics committee for this, but the experts on that committee also disagreed among themselves. If you are trying to choose between two defensible options, you might use public opinion as a tie-breaker. But there are also cases where you had better ignore public opinion: in some countries, for instance, people would give the highest priority to people of higher status. Or, to take another example, many people would give priority to children. You might decide to ignore that, but then you have to frame the decision in a way that is palatable to the public.