Anyone can publish a science story, but who makes sure that it’s accurate? Shama Sograte, co-founder of AuthentiSci, explains how scientists can help us make sense of complex details in public science communication.

Who checks the science in science reporting?

A widely cited Time magazine article claims the long-extinct dire wolf is back. While many outlets picked up the story, some scientists on social media have disputed its claims. As a non-expert, I find it difficult to judge which party is right. How could AuthentiSci help me navigate this noise?

That’s exactly the kind of situation AuthentiSci was built for. There’s a lot of great science reporting out there, but also plenty of misinformation or oversimplified takes. AuthentiSci helps by giving scientists a way to directly assess and respond to articles like the one you mentioned.

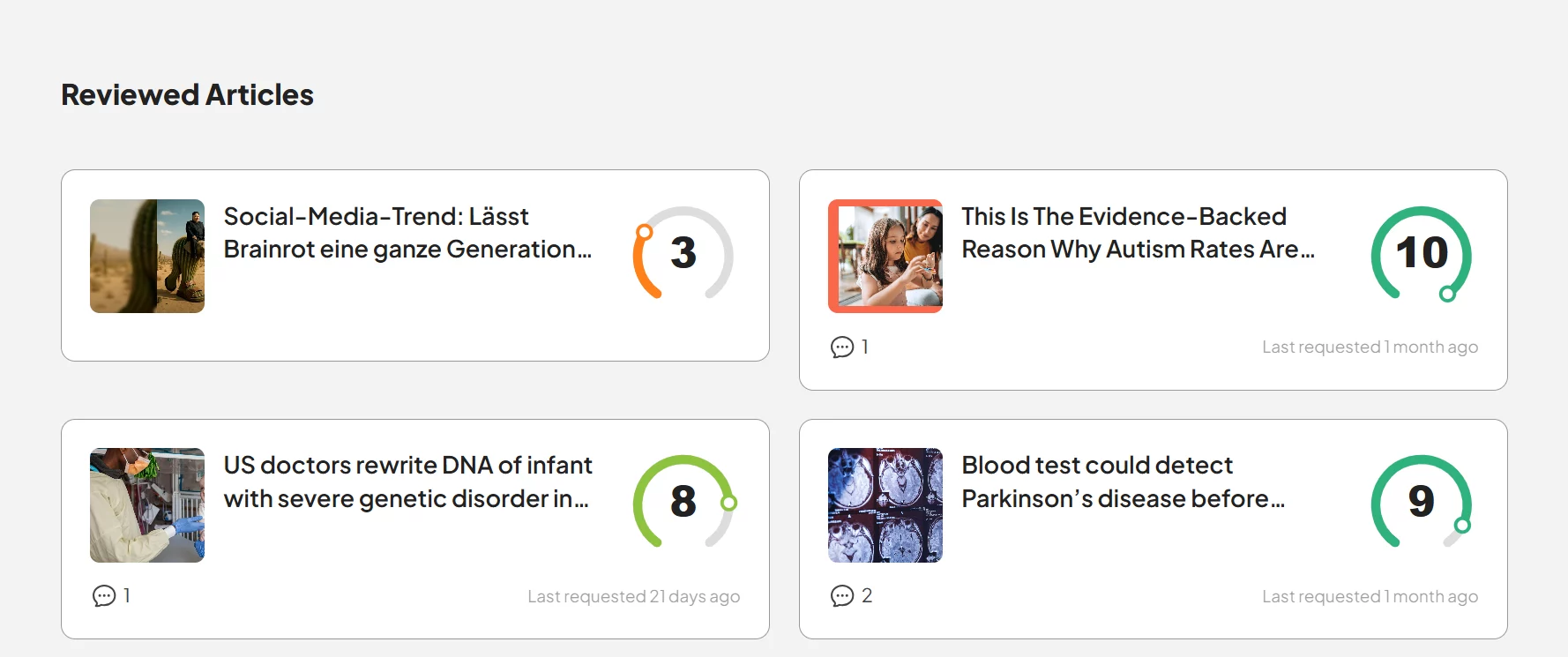

If you’re reading an article online, you can click the AuthentiSci button in your browser. If the article has been reviewed by scientists, you’ll see a score showing how well it aligns with current research. For example, if there’s peer-reviewed science supporting the claim, the score will be higher. If the journalist sticks closely to the evidence, even better. But if the article misrepresents or ignores the science, the score will reflect that.

The goal isn’t to say what’s absolutely true or false, but to show how closely the article reflects what scientists currently understand.

What do scientists get out of participating?

There’s no material reward. We don’t pay the scientists, so participation is completely voluntary. Our model is a bit like Wikipedia: it’s non-profit and crowdsourced, and people contribute because they care about sharing knowledge.

All of the founders are scientists. We’ve seen that many in the scientific community really want better ways to communicate with the public. There’s a lot of frustration when a scientist’s message doesn’t come through, or when it gets distorted in the media.

Many researchers actually want to engage with the public, but they don’t always have the right tools. AuthentiSci gives them a direct way to help set the record straight and improve how science is communicated.

What does the process look like for scientists?

I usually recommend starting with articles related to your own expertise. Because you already know the field, those articles are quick to review, and reviewing them helps ensure your area of work is accurately represented to the public. The tool itself is designed to be simple and fast. There’s a clear scoring system, and if you’re familiar with the topic, it only takes a few clicks to give a rating.

There’s also space for comments, which we strongly encourage, especially when there’s more context to add or when the article leaves out important perspectives from the field. For example, an article might be well written and scientifically sound but still ignore other relevant theories. Scientists can use the comments to fill in those gaps for readers.

Can you explain how the criteria of your scoring system work?

The first criterion is evidence. We look at how strongly the article is backed by scientific research. Is it based on a study? Has that study been peer-reviewed, or is it only a preprint? The goal is to evaluate whether the claim is actually supported by science.

The second is balance. This checks whether different viewpoints are fairly represented, and whether the article shows signs of political or financial bias. It also picks up on unintentional misinterpretations, which can happen even if the journalist didn’t mean to distort anything.

The third is clarity: how well the article communicates with a general audience, and how well its message matches the evidence. If the message is too complex or too vague, it can easily lead to confusion or misinterpretation.

These three scores are averaged into an overall score that’s easy for readers to understand. But just as important is the comment box, where reviewers can explain their scores, add context, or point out nuances the article missed.
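To make the averaging concrete, here is a minimal Python sketch. It is an illustration only, not AuthentiSci’s actual implementation. The interview does not state the rating scale or whether the criteria are weighted, so the 1 to 5 range, the unweighted mean, and the function name `overall_score` are all assumptions.

```python
# Illustrative sketch only; not AuthentiSci's code.
# Assumption: each criterion is rated on a hypothetical 1-5 scale.
MIN_SCORE, MAX_SCORE = 1, 5


def overall_score(evidence: float, balance: float, clarity: float) -> float:
    """Combine the three criterion scores into one overall score.

    Assumption: a plain, unweighted arithmetic mean, which is one reading
    of "averaged"; the platform's real formula may differ.
    """
    scores = (evidence, balance, clarity)
    for s in scores:
        # Reject ratings outside the assumed scale.
        if not MIN_SCORE <= s <= MAX_SCORE:
            raise ValueError(f"score {s} is outside {MIN_SCORE}-{MAX_SCORE}")
    return sum(scores) / len(scores)


# A well-sourced, fairly clear, but somewhat one-sided article:
print(overall_score(evidence=5, balance=3, clarity=4))  # 4.0
```

With equal weights, a strong evidence score can offset a weaker balance score, so the single number alone cannot show which criterion pulled it down. That is where the reviewers’ comments come in.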

What motivated you to start the platform in the first place? Was there a particular moment that pushed you to act?

Scientific misinformation and fake news have been around for a long time, but what gave us the biggest push was COVID. Everyone was panicking and searching for scientific information to follow, but information was coming from so many directions at once that it became very hard to know what to trust.

Around that time, we met at the Lindau Nobel Laureate Meeting, which brings together young scientists and Nobel Laureates to discuss science and the future of society. That’s where we connected and later teamed up for a hackathon.

We decided to create AuthentiSci and built the first minimum viable product. It was a 24-hour contest, and we won second or third prize. That gave us the motivation to keep going. We got support from the Max Planck Society, which gave us our initial funding – and hopefully that will turn into a long-term partnership.

The articles you review vary a lot in terms of length, depth, and overall quality. Don’t respected outlets like The Guardian or the BBC already provide a sort of heuristic for good science reporting?

Absolutely, there’s some excellent, reliable science journalism out there. We aren’t here to produce journalism ourselves. The goal is to give scientists a way to communicate directly with the public. That means they can flag inaccurate information, but also highlight great reporting.

If certain outlets consistently earn high scores, readers might start to see them as more trustworthy – almost like getting a “scientist-approved” label. We’re not trying to judge journalists. It’s more about adding a layer of insight: a space where scientists can comment, give context, or point out what’s missing. It opens up the article for deeper understanding and discussion.

Have authors or media outlets responded to receiving a low score? Have you seen cases where they’ve revised their content as a result?

Not yet. Right now, we’re still building up our community and gathering enough material. While we have some active reviewers, we need more to cover as many articles as possible. It’s a huge task, given the massive amount of information out there.

But look at Wikipedia: with millions of articles in many languages, it shows that the power of the crowd can lead to incredible results. I believe AuthentiSci can achieve something similar and, over time, improve the science content available to the public.

What kind of feedback have you received from the public – especially those without a science background? Are people engaging with the platform as you hoped?

So far, we haven’t actively promoted the tool to the general public, as we’re still focused on building our scientific community. However, at science festivals, where we have engaged with a wider audience, the feedback has been overwhelmingly positive. In general, people are excited and looking forward to using the platform.

There’s a clear need for a tool like AuthentiSci. People often struggle with navigating complex scientific content and technical jargon. While many want to access scientific papers, paywalls remain a major barrier – though that’s a whole other issue.

We now have a feature that allows users to suggest articles for review. These articles are then matched with scientists who can provide expert ratings.

AuthentiSci is currently run mostly by volunteers and a small leadership team. What would scaling up look like?

Scaling up would involve expanding our team to handle both the technical and organizational sides. This includes managing the software and securing funding, since we are a non-profit and want to remain one. We’ll rely on donors, public funding, and other sources, and securing that support is a full-time job in itself. We also plan to increase our outreach to the general public, raise awareness, and collaborate with other like-minded non-profits.

Additionally, we’d need more people to coordinate the scientific community and help it grow into a strong network that has a voice through AuthentiSci. Unfortunately, the scientific community isn’t always as loud or as charismatic as those spreading misinformation online. As a result, it’s easy for misinformation to dominate, which makes it all the more important for us to amplify scientists’ voices through our platform.